One of the software features that Amazon rolled out in today’s launch of the new Kindle Fire and Kindle Paperwhite was “X-Ray”, an umbrella term for reference and lookup on both texts and movies. The X-Ray name seems to encompass a number of different user interface affordances, some of which rely upon explicit metadata, and others which work on implicit — or latent — patterns. I’m interested in the latter of these: how Amazon is exposing some hidden structures of text, and what that might mean for folks who are interested in text mining.

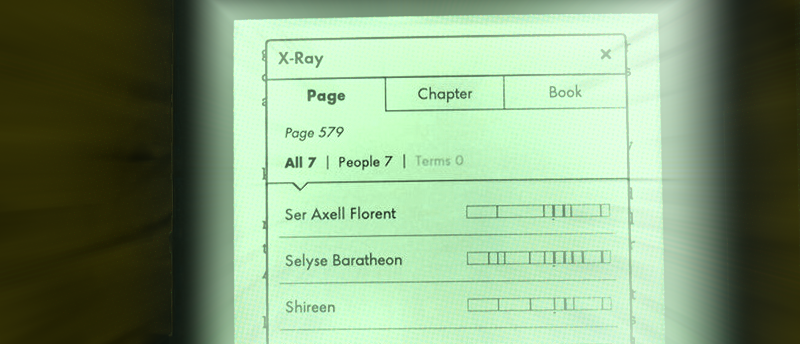

When I saw the demo of X-Ray for Books in The Verge’s liveblog of the Amazon event, I was captivated by an image that showed a kind of “heatmap” of character saturation over the course of a book:

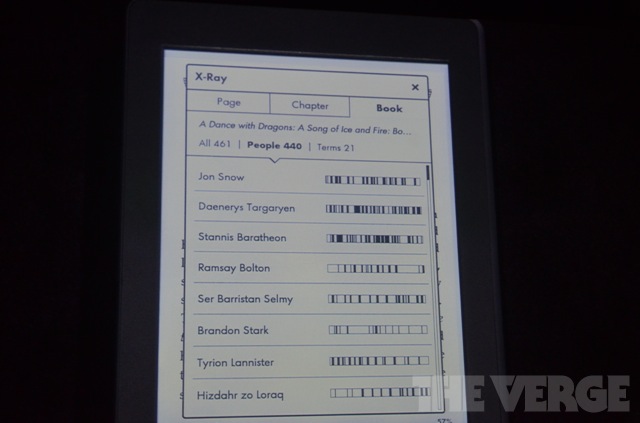

This screen, shown on the new Paperwhite touch-enabled Kindle, shows something really neat: words which are the names of characters are recognized as such, and the frequency of each is mapped to a thin linear rectangle, presumably stretching from the start to the end of the book. (In fact, the scope of the visualization is selectable via the buttons: Page, Chapter and Book.) More black bars towards the end means a character shows up in the last part of the book, and vice-versa.
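To make the idea concrete, here is a minimal sketch of how such a strip could be computed. It assumes a plain-text copy of the book and exact-string matching on a character's name; the function names `mention_bins` and `render_bar` are invented for illustration, and nothing here is known to reflect Amazon's actual implementation.

```python
import re

def mention_bins(text, name, bins=20):
    """Count occurrences of `name` in each of `bins` equal-width slices of `text`."""
    counts = [0] * bins
    if not text:
        return counts
    pattern = re.compile(r"\b" + re.escape(name) + r"\b")
    for match in pattern.finditer(text):
        # Map the character offset of the match to a slice of the book.
        index = min(match.start() * bins // len(text), bins - 1)
        counts[index] += 1
    return counts

def render_bar(counts, shades=" .:#"):
    """Draw counts as one row of text; darker characters mean more mentions."""
    peak = max(counts) if counts else 0
    if peak == 0:
        return shades[0] * len(counts)
    last = len(shades) - 1
    # Ceiling division so that any non-zero count gets a visible mark.
    return "".join(shades[(c * last + peak - 1) // peak] for c in counts)
```

Calling `render_bar(mention_bins(book_text, "Ahab"))` would print one row per character, and stacking several rows gives a crude text version of the screen above. Narrowing `text` to a chapter or a page would mimic the scope buttons.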

Of course, we don’t know very much about how Amazon is implementing this feature. Does the visualization of, say, Ramsay Bolton imply that he’s absent from the last fifth of the book? Or just that he doesn’t say very much? We can imagine a couple of ways of going about preparing the underlying data:

1. A real human being goes through and marks each page for the presence of a character.

2. A robot tries to guesstimate, based on such tricks as linking “Ramsay Bolton” with “Ramsay” and “he” in the following paragraphs.

3. Some hybrid of these — robotic guesses followed by human spot-checking.
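The "robot" option can be sketched in a few lines. This is a deliberately naive illustration, not a description of what Amazon does: it assumes a hand-supplied list of full character names, treats each part of a name as an alias, and credits every pronoun to whichever character was named most recently, ignoring gender and grammar. The names `build_aliases`, `count_mentions` and `PRONOUNS` are hypothetical.

```python
import re

PRONOUNS = {"he", "him", "his", "she", "her", "hers"}

def build_aliases(full_names):
    """Map each full name and each of its parts to the canonical full name."""
    aliases = {}
    for full in full_names:
        aliases[full] = full
        for part in full.split():
            # A part shared by two characters (e.g. a surname) is marked ambiguous.
            clash = part in aliases and aliases[part] != full
            aliases[part] = None if clash else full
    return aliases

def count_mentions(paragraphs, full_names):
    """Count name, alias and (naively attributed) pronoun mentions per character."""
    totals = {name: 0 for name in full_names}
    aliases = build_aliases(full_names)
    # Longest aliases first, so "Ramsay Bolton" is preferred over "Ramsay".
    names = sorted((a for a, c in aliases.items() if c), key=len, reverse=True)
    if not names:
        return totals
    pattern = re.compile(
        r"\b(?:" + "|".join(map(re.escape, names)) + r")\b|\b\w+\b"
    )
    last_seen = None  # carried across paragraphs, as in the example above
    for paragraph in paragraphs:
        for match in pattern.finditer(paragraph):
            token = match.group(0)
            canonical = aliases.get(token)
            if canonical:
                totals[canonical] += 1
                last_seen = canonical
            elif token.lower() in PRONOUNS and last_seen:
                # Crude guess: the pronoun refers to the last character named.
                totals[last_seen] += 1
    return totals
```

The pronoun rule will misfire constantly in real prose, which is exactly why the hybrid option, with a human spot-checking the guesses, seems plausible.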

There are lots of ways in which such a simple visualization is problematic (is the whale mostly absent, or undeniably and constantly present, throughout Moby Dick?), but as a first-order approximation this kind of visualization works well, especially when main characters are lined up all on one screen as shown above. Patterns immediately become clear: you can imagine how the Ghost and Fortinbras would bracket Hamlet.

How does Amazon choose what terms to show for this? You don’t want to show the distribution of the word “the” in most books, and lots of other common words would result in banal visualizations. This problem in machine learning is called Named-Entity Recognition, and Amazon’s marketing material provides some hints at their approach:

For Kindle Touch, Amazon invented X-Ray - a new feature that lets customers explore the “bones of the book.” […] Amazon built X-Ray using its expertise in language processing and machine learning, access to significant storage and computing resources with Amazon S3 and EC2, and a deep library of book and character information. The vision is to have every important phrase in every book.

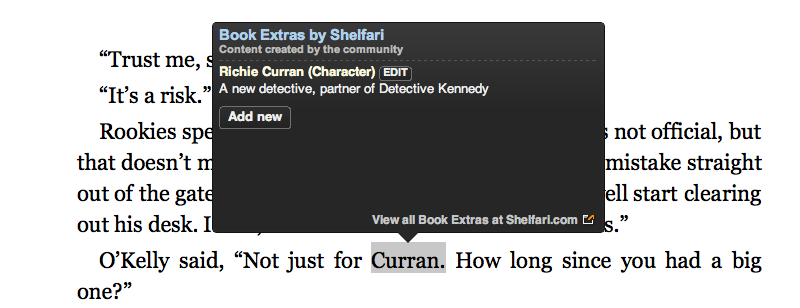

One simple Named Entity Recognition technique (in English) is to take capitalized words and see if they match against common lists of proper names, places and other kinds of things; if so, make them into links and see if reference sources such as Wikipedia have content for them. The addition of Shelfari (an Amazon acquisition which aggregates info specific to books, including characters and physical locations) to the stable of lookup sources is a great move, because it’s more likely to have fancruft data that Wikipedia deems non-notable (the names of Bilbo Baggins’s distant cousins, etc.).
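As a rough sketch of that capitalized-word approach: the code below pulls out runs of capitalized words and keeps only the phrases found in a lookup list. The `gazetteer` set stands in for whatever name lists or reference sources a real system would consult, and both it and the function name `find_entities` are assumptions made for illustration. Real NER systems are considerably more sophisticated.

```python
import re
from collections import Counter

# One or more capitalized words separated by whitespace, e.g. "Bilbo Baggins".
CAPITALIZED_RUN = re.compile(r"\b[A-Z][a-z]+(?:\s+[A-Z][a-z]+)*\b")

def find_entities(text, gazetteer):
    """Return a Counter of capitalized phrases that appear in `gazetteer`."""
    found = Counter()
    for match in CAPITALIZED_RUN.finditer(text):
        words = match.group(0).split()
        start = 0
        while start < len(words):
            # Prefer the longest phrase starting here that the gazetteer knows.
            for end in range(len(words), start, -1):
                phrase = " ".join(words[start:end])
                if phrase in gazetteer:
                    found[phrase] += 1
                    start = end
                    break
            else:
                # No known phrase starts with this word (often a
                # sentence-initial "The" or "Then"), so skip it.
                start += 1
    return found
```

Filtering against a list is what keeps words like “The” out of the results even though they are capitalized at the start of a sentence. The obvious weakness is coverage: a name missing from the list is never found, which is where a fan-maintained source such as Shelfari would help.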

What’s really interesting about this feature, though, is that it’s not really new at all: X-Ray for Books was introduced along with the first touch-screen Kindle way back in September 2011. Check out this video excerpt from that event, which shows the heatmap visualizations, as well as how X-Ray loads in Wikipedia and Shelfari content:

In a way, it’s unsurprising that this X-Ray feature debuted with the Kindle Touch: no other hardware Kindle device has the kind of user interaction model that freeform querying requires. Although even first-generation Kindles let you highlight passages and save simple excerpts as clippings, these were line-based, not at the level of individual words. Software-only Kindle implementations, such as the iOS app, do not presently offer X-Ray, only dictionary lookups, together with auxiliary buttons for Google and Wikipedia. The Kindle App for Mac OS X offers “Book Extras by Shelfari,” but no frequency visualizations of any kind. (Kindle for Android offers only a dictionary; Kindle for WebOS offers not even that.)

And the restriction of Book X-Ray to the Kindle Touch — a kind of “stealth rollout” — helps make my surprise at a feature that had been around over a year a little less embarrassing. The Touch, with its awkward infrared-based touch sensor, deep bezel, and slow page-turning performance, can hardly have been the most popular device sold. Marco Arment’s review of several Kindles and clones rated the Kindle Touch pretty poorly — and, interestingly, made no mention of the X-Ray feature. The technology was hiding in plain sight, in one of the more awkward of the Kindle cousins.

Given the importance of touchscreens to this kind of textual exploration, however, I am surprised that Amazon’s other touch-enabled Kindle, the original Kindle Fire, didn’t have this Book X-Ray feature. I’ve never used a first-generation Fire myself (reviews have been pretty bad) but support forum posts confirm that it does not have the X-Ray feature for books. The comments in this Verge article about the Fire software changes seem to suggest nobody knows if first-gen Fire hardware will see the revamped software, and with it the X-Ray features.

Even Kindle Touch owners themselves could be excused for overlooking the feature. The hardware Kindles are known for excellent screen readability, amazing battery life, and a great book catalog; using them as data-mining tools may be too much of a leap. In fact, a recent thread about X-Ray on the Kindle Touch on Amazon’s support forum seems to show a mixture of confusion and indifference about X-Ray.

But with the large number of new hardware Kindles that are now available from Amazon with touch interfaces (only one legacy $69 device is left without touch capabilities, if I understand things correctly), we can expect the X-Ray feature to — perhaps — gain more visibility. If Amazon ever consistently deploys Book X-Ray across all of its hardware and software platforms with arbitrary term selection (via mouse or finger) — desktop and mobile apps inclusive — then there’s the possibility that students reading novels on the Kindle platform will have access to an easy and engaging first glimpse into text mining. And not just on “classic” texts that we teach in literature seminars — from Amazon’s examples, Book X-Ray may well first be deployed on popular contemporary fiction. Although term frequency by itself may seem like a simplistic thing to focus on, in Ted Underwood’s words:

…you can build complex arguments on a very simple foundation. Yes, at bottom, text mining is often about counting words. But a) words matter and b) they hang together in interesting ways, like individual dabs of paint that together start to form a picture.

Kindle Book X-Ray may be the first chance many people get to hold a digital humanities paintbrush.